Update 10-04-2017 :

So after running this in test/pre-prod for some time, I realised there were a couple of problems with my initial configuration.

- My math was off. I was graphing two values, ‘bytes.fromcache.toclients’ and ‘bytes.fromorigin.toclients’

This is not correct. What we actually want is a value for ALL data sent to clients, which is the sum of three values: bytes.fromcache.toclients, bytes.fromorigin.toclients and bytes.frompeers.toclients

Then we can accurately see exactly how much data was served to client devices vs how much data was pulled from Apple over the WAN (bytes.fromorigin.toclients).

- The way I was importing data into InfluxDB was incorrect. I was importing each table from sqlite as a ‘measurement’ into influxdb.

This is not the way it should be done with influx, rather, we should create a ‘measurement’ and then add fields to this measurement.

The main reason for this is that Influx cannot do any math across different measurements, i.e. it can’t join measurements or do a sum across measurements.

For example, if I want to do a query showing the sum of the three metrics I mentioned above, and these three metrics were different measurements in influxdb it would not work.
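To make the "one measurement, several fields" idea concrete, here is a rough Python sketch that renders a single point in InfluxDB line protocol. The helper names `escape_tag` and `to_line` are hypothetical, the byte counts are illustrative, and the timestamp is assumed to be written with second precision.

```python
def escape_tag(value: str) -> str:
    """Escape commas, equals signs and spaces, as line protocol requires in tag values."""
    return value.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def to_line(measurement: str, tags: dict, fields: dict, timestamp_s: int) -> str:
    """Render one point: a single measurement carrying several integer fields."""
    tag_part = ",".join(f"{k}={escape_tag(v)}" for k, v in sorted(tags.items()))
    # Integer field values carry an 'i' suffix in line protocol.
    field_part = ",".join(f"{k}={int(v)}i" for k, v in fields.items())
    return f"{measurement},{tag_part} {field_part} {timestamp_s}"


line = to_line(
    "bytestoclients",
    {"site_code": "1234"},
    {
        "bytes.fromcache.toclients": 907,
        "bytes.fromorigin.toclients": 227064,
        "bytes.frompeers.toclients": 0,
    },
    1487115473,
)
print(line)
```

Because all three values live in the same measurement, a single query can add them together.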

Luckily all that was required to fix these two issues was to simply adjust my logstash configuration.

Logstash is super flexible and allows for multiple inputs and outputs and basic if/then logic.

I have updated the post below to include the new information

Summary

So I have a lot of Apple Caching Servers to manage. The problem for me is monitoring their stats. How much data have they saved the site where they are located? Sure you can look at the Server.app stats and get a general idea. You could also look at the raw data from:

serveradmin fullstatus caching

You could also use the fantastic script from Erik Gomez, Cacher, which can trigger server alerts to send email notifications as well as send Slack notifications to provide you with some statistics of your caching server.

And this is all great for a small number of caching servers, but once your fleet starts getting up into the 100+ territory, we really need something better. Management are always asking me for stats for this site and that site, or for a mixture of the sites, or a region, or all of the sites combined. Collecting this data and then creating graphs in Excel with the above methods is rather painful.

There has to be a better way!

Enter the ILG stack (InfluxDB, Logstash and Grafana)

If you have had a poke around Server.app 5.2 caching server on macOS 10.12, you may have noticed that there is a Metrics.sqlite database in

/Library/Server/Caching/Logs/

Let’s have a look at what’s in this little database:

$ sqlite3 Metrics.sqlite

SQLite version 3.14.0 2016-07-26 15:17:14

Enter ".help" for usage hints.

Let’s turn on headers and columns

sqlite> .headers ON

sqlite> .mode column

Now let’s see what tables we have in here

sqlite> .tables

statsData version

statsData sounds like what we want, let’s see what’s in there.

sqlite> select * from statsData;

entryIndex collectionDate expirationDate metricName dataValue

---------- -------------- -------------- ----------------------- ----------

50863 1487115473 1487720273 bytes.fromcache.topeers 0

50864 1487115473 1487720273 requests.fromclients 61

50865 1487115473 1487720273 imports.byhttp 0

50866 1487115473 1487720273 bytes.frompeers.toclien 0

50867 1487115473 1487720273 bytes.purged.total 0

50868 1487115473 1487720273 replies.fromorigin.tope 0

50869 1487115473 1487720273 bytes.purged.youngertha 0

50870 1487115473 1487720273 bytes.fromcache.toclien 907

50871 1487115473 1487720273 bytes.imported.byxpc 0

50872 1487115473 1487720273 requests.frompeers 0

50873 1487115473 1487720273 bytes.fromorigin.toclie 227064

50874 1487115473 1487720273 replies.fromcache.topee 0

50875 1487115473 1487720273 bytes.imported.byhttp 0

50876 1487115473 1487720273 bytes.dropped 284

50877 1487115473 1487720273 replies.fromcache.tocli 4

50878 1487115473 1487720273 replies.frompeers.tocli 0

50879 1487115473 1487720273 imports.byxpc 0

50880 1487115473 1487720273 bytes.purged.youngertha 0

50881 1487115473 1487720273 bytes.fromorigin.topeer 0

50882 1487115473 1487720273 replies.fromorigin.tocl 58

50883 1487115473 1487720273 bytes.purged.youngertha 0

Well now this looks like the kind of data we are after!

Looks like all the data is stored in bytes, so no conversions from MB KB TB need to be done. Bonus.

It also looks like each stat or metric, e.g. bytes.fromcache.topeers, is written to this DB after, or very shortly after, a transaction or event occurs on the caching server, such as a GET request for content from a device. This means that we can add all these stats up over a day and get a much more accurate idea of how much data the caching server is seeing.

This solves the problem that the Cacher script by Erik runs into when the server reboots.

In Cacher, the script looks for a summary of how much data the server has served since the service has started by scraping the Debug.log. You have probably seen this in the Debug log

2017-02-22 09:41:10.137 Since server start: 1.08 GB returned to clients, 973.5 MB stored from Internet, 0 bytes from peers; 0 bytes imported.

Cacher then checks the last value of the previous day, compares it to the latest value at the end of the report day and works out the difference to arrive at a figure for how much data was served to clients on that report day. While this works great on a stable caching server that never reboots or has the service restart on you, it is a little too fragile for my needs. I’m sure Erik would also like a more robust method to generate that information as well.
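The difference between the two approaches can be sketched in a few lines of Python. This is not Cacher's actual code. It uses an in-memory stand-in for Metrics.sqlite with the column names shown earlier; the column types, the sample rows and the `counter_delta` helper are assumptions for illustration only.

```python
import sqlite3

# In-memory stand-in for Metrics.sqlite; the sample rows are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE statsData (entryIndex INTEGER PRIMARY KEY, collectionDate INTEGER, "
    "expirationDate INTEGER, metricName TEXT, dataValue INTEGER)"
)
conn.executemany(
    "INSERT INTO statsData VALUES (?, ?, ?, ?, ?)",
    [
        (1, 1487115473, 1487720273, "bytes.fromcache.toclients", 907),
        (2, 1487115473, 1487720273, "bytes.fromorigin.toclients", 227064),
        (3, 1487116073, 1487720873, "bytes.fromcache.toclients", 5000),
        (4, 1487201873, 1487806673, "bytes.fromcache.toclients", 1200),
    ],
)

# Sum every per-event row into one total per UTC day and metric.
daily_totals = conn.execute(
    "SELECT date(collectionDate, 'unixepoch') AS day, metricName, SUM(dataValue) "
    "FROM statsData GROUP BY day, metricName ORDER BY day, metricName"
).fetchall()
for day, metric, total in daily_totals:
    print(day, metric, total)


def counter_delta(previous_total: int, current_total: int) -> int:
    """Day-over-day difference of a cumulative 'since server start' counter."""
    return current_total - previous_total


# If the service restarts, the counter starts again from zero and the delta
# goes negative, which is the fragility described above.
print(counter_delta(1_080_000_000, 50_000_000))
```

Summing the individual rows does not care whether the service restarted in between, because each row is its own event.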

Looking back at the Metrics.sqlite DB, if you are wondering about those collectionDate and expirationDate values, they are epoch timestamps, which is also a bonus as they are very easy to convert into a human-readable date with a command like:

$ date -j -f %s 1487115473

Wed 15 Feb 2017 10:37:53 AEDT

It also makes it easy to do comparisons and simple math if you need to.
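The same conversion and a bit of "simple math" can be done in Python with only the standard library. The two timestamps are taken from the sqlite output above; the fixed UTC+11 offset standing in for AEDT is an assumption made to keep the sketch self-contained.

```python
from datetime import datetime, timedelta, timezone

collection = datetime.fromtimestamp(1487115473, tz=timezone.utc)
expiration = datetime.fromtimestamp(1487720273, tz=timezone.utc)

# The same instant expressed in a fixed UTC+11 offset (standing in for AEDT).
aedt = timezone(timedelta(hours=11))
print(collection.astimezone(aedt).isoformat())

# Epoch values subtract cleanly: how long does a row live before it expires?
lifetime = expiration - collection
print(lifetime)
```

For these sample rows the gap between collectionDate and expirationDate works out to exactly seven days.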

Having all this information in a sqlite database already makes it quite easy-ish for us to pick up this data with Logstash, feed it into an InfluxDB instance and then visualise it with Grafana.

With this setup I was able to very easily show the statistics of all our caching servers at once. Of course we can also drill down into individual schools’ caching servers to reveal those results as well.

YAY PRETTY GRAPHS!

The nuts and bolts

So how do we get this set up? Well, this is not going to be a step-by-step walkthrough, but it should be enough to get you going. You can then make your own changes for how you want to set it up in your own environment. Everyone’s prod environment is a little different, but this should be enough to get you set up with a PoC environment.

Lets start with getting Logstash setup on your Caching server.

Requirements:

- macOS 10.12.x +

- Server.app 5.2.x +

- Java8

- Java8JDK

- Java JVM script from the always helpful Rich and Frogor script here

Start by:

- Getting your caching server up and running.

- Install Java 8 and the Java 8 JDK.

- Run the JVM script

- Confirm that you have Java 8 installed correctly by running

java -version

from the command line

If all has gone well, you should get something like this back:

# java -version

java version "1.8.0_111"

Java(TM) SE Runtime Environment (build 1.8.0_111-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.111-b14, mixed mode)

Now we are ready to install Logstash.

- Download the latest tar ball from here: https://www.elastic.co/downloads/logstash

- Store it somewhere useful like /usr/local

- Extract the tar with

tar -zxvf logstash-5.2.1.tar.gz -C /usr/local

- This will extract it into the /usr/local directory for you

Now we need to add some plugins, this is where it gets a little tricky.

If you have authenticated proxy servers, you are going to have a bad time, so lets pretend you don’t.

Installing Logstash plugins

First lets get the plugin that will allow Logstash to send output to InfluxDB

Run the logstash plugin binary and install the plugin logstash-output-influxdb:

$ cd /usr/local/logstash-5.2.1/bin

$ ./logstash-plugin install logstash-output-influxdb

Now we will install the SQLite JDBC connector that allows Logstash to access the sqlite db that caching server saves its metrics into.

- Download the sqlite-jdbc-3.16.1 plugin from here: https://bitbucket.org/xerial/sqlite-jdbc/downloads/

- Create a directory in our Logstash dir to save it, I like to put it in ./plugins

mkdir -p /usr/local/logstash-5.2.1/plugins

- Copy the sqlite plugin into our new directory

cp sqlite-jdbc-3.16.1.jar /usr/local/logstash-5.2.1/plugins

Ok we now have Logstash installed and ready to go! Next up we make a configuration file to do all the work.

Thinking about the InfluxDB Schema

Before we start pumping data into Influx, we should probably think about how we are going to structure that data.

I came up with a very basic schema that looks like this:

Here I have created 7 ‘measurements’ which then group together the metricnames from the caching server sqlite database

Because of the way math works in influxdb, this allows me to write a query like :

SELECT sum("bytes.fromcache.toclients") + sum("bytes.fromorigin.toclients") + sum("bytes.frompeers.toclients") as TotalServed FROM "autogen"."bytestoclients" WHERE "site_code" = '1234' AND $timeFilter GROUP BY time(1d)

This query will add the three metrics together to give a total of all bytes from cache, origin and peers to client devices.
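If you need the same query for many sites, the text can be generated rather than typed by hand. The following Python sketch only builds the query string; the function name `total_served_query` is hypothetical, and `$timeFilter` is left untouched for Grafana to expand.

```python
FIELDS = (
    "bytes.fromcache.toclients",
    "bytes.fromorigin.toclients",
    "bytes.frompeers.toclients",
)


def total_served_query(site_code: str, interval: str = "1d") -> str:
    """Build the InfluxQL text that sums the three 'to clients' fields for one site."""
    summed = " + ".join('sum("{}")'.format(name) for name in FIELDS)
    return (
        'SELECT {} as TotalServed FROM "autogen"."bytestoclients" '
        "WHERE \"site_code\" = '{}' AND $timeFilter GROUP BY time({})"
    ).format(summed, site_code, interval)


query = total_served_query("1234")
print(query)
```

The sketch does no escaping of the site code, so it should only be fed values you control.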

Creating the configuration file

This is the most challenging part, and a huge shoutout goes to @mosen for all his help on this. I definitely wouldn’t have been able to get this far without his help.

The configuration file we need contains three basic components, the inputs, a filter and the outputs.

The inputs

The input is where we are getting our data from. In our case it’s the sqlite DB, so our input is going to use the sqlite jdbc plugin, and we need to configure it so that it knows what information to get and where to get it from.

It’s pretty straightforward and should make sense, but I’ll describe each item below.

We are going to have an input for each measurement we want. This way we can write a sqlite query to get the metric names we want to put into that measurement.

For example, the below input is going to get the following metrics:

- bytes.fromcache.toclients

- bytes.fromorigin.toclients

- bytes.frompeers.toclients

- bytes.fromcache.topeers

- bytes.fromorigin.topeers

from the sqlite database by running the query in the statement section.

Then we add a label to this input so that we can call it later and ensure the data from this input is put into the correct measurement in the influxdb output.

The label is set by using the type key in the input as you see below

input {

jdbc {

jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"

jdbc_driver_class => "org.sqlite.jdbc"

jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"

jdbc_user => ""

schedule => "* * * * *"

statement => 'SELECT * FROM statsData WHERE metricname LIKE "bytes.%.toclients" OR metricname LIKE "bytes.%.topeers"'

tracking_column => "entryindex"

use_column_value => true

type => "bytestoclients"

}

}

The Logstash documentation is pretty good and describes each of the above items, check out the documentation here

The only thing to really worry about here is the schedule. This is in regular cron-style format; with the current setting as above, Logstash will check the Metrics.sqlite database every minute and submit information to InfluxDB.

This is probably far too often for a production system. For testing it’s fine though, as you will see almost instant results. But before you go to production you should consider running this on a more sane schedule, like perhaps every hour or two, or whatever suits your environment.

So in the completed logstash config file we will end up with a jdbc input for each sqlite statement or query we need to run to populate the 7 measurements we add to influx.
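One thing worth knowing about tracking_column and use_column_value: the jdbc input remembers the highest value it has seen in that column and exposes it to the statement as :sql_last_value, so a statement only skips already-seen rows if it filters on that value. The Python sketch below illustrates that idea only and is not how the plugin is implemented; the table is an in-memory stand-in and `fetch_new_rows` is a hypothetical helper.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE statsData (entryIndex INTEGER PRIMARY KEY, collectionDate INTEGER, "
    "expirationDate INTEGER, metricName TEXT, dataValue INTEGER)"
)
conn.executemany(
    "INSERT INTO statsData VALUES (?, ?, ?, ?, ?)",
    [
        (50863, 1487115473, 1487720273, "bytes.fromcache.topeers", 0),
        (50864, 1487115473, 1487720273, "requests.fromclients", 61),
    ],
)


def fetch_new_rows(connection, last_seen):
    """Return rows newer than last_seen plus the updated high-water mark."""
    rows = connection.execute(
        "SELECT entryIndex, metricName, dataValue FROM statsData "
        "WHERE entryIndex > ? ORDER BY entryIndex",
        (last_seen,),
    ).fetchall()
    new_last = rows[-1][0] if rows else last_seen
    return rows, new_last


# First scheduled run: everything is new.
first_batch, last_seen = fetch_new_rows(conn, 0)

# A new stat is written before the next run; only that row comes back.
conn.execute(
    "INSERT INTO statsData VALUES (50865, 1487115473, 1487720273, 'imports.byhttp', 0)"
)
second_batch, last_seen = fetch_new_rows(conn, last_seen)
print(len(first_batch), second_batch, last_seen)
```

In Logstash terms, the equivalent would be adding something like "AND entryindex > :sql_last_value" to each statement, which you should test against your own plugin version before relying on it.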

The filter

The filter is applied to the data that we have retrieved with the input. Here is where we are going to add some extra fields and tags to go with our data. These allow us to use some logic to direct the right input to the right output in the Logstash file, and to group and search our data based on which server that data is coming from.

Think of these fields as a way to ‘tag’ the data coming from this caching server with information about which physical caching server it is.

In my environment I have 4 tags that I want the data to have that I can search on and group with. In my case:

region – This is the physical region of where the server is located

site_name – This is the actual name of the site

site_code – This is a unique number that each site is assigned

school_type – In my case this is either primary school or high school

We are going to use some logic here to add a tag to the inputs depending upon what the type is. We can’t use the ‘type’ directly in the output section, so we have to convert it into a tag, which we can then send to the output and do logic on.

We also remove any unneeded fields such as collectiondate and expirationdate with the date, match, remove_field section.

Then we also add our location and server information by adding our tags region, site_name etc. with the mutate function.

filter {

if [type] == "bytestoclients" {

mutate {

add_tag => [ "bytestoclients" ]

}

}

date {

match => [ "collectiondate", "UNIX" ]

remove_field => [ "collectiondate", "expirationdate" ]

}

mutate {

add_field => {

"region" => "Region 1"

"site_name" => "Site Name Alpha"

"site_code" => "1234"

"school_type" => "High School"

}

}

}

Again the documentation from Logstash is pretty good to describe how each of these items works, check here for the documentation

The important parts above that you might want to modify are the fields that are added with the mutate section.
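If it helps to see what the filter does to a single row, here is a rough Python model of it. It is a simplified imitation of the three filter steps, not Logstash itself; `apply_filter` and `SITE_FIELDS` are hypothetical names, and the event is a plain dict with the lowercase column names used in the config.

```python
from datetime import datetime, timezone

# The per-server values added by the mutate/add_field section.
SITE_FIELDS = {
    "region": "Region 1",
    "site_name": "Site Name Alpha",
    "site_code": "1234",
    "school_type": "High School",
}


def apply_filter(event: dict) -> dict:
    """Imitate the filter section on one event without modifying the input dict."""
    out = dict(event)
    # 1. Copy the input's type into a tag so the output section can test for it.
    if out.get("type") == "bytestoclients":
        out["tags"] = list(out.get("tags", [])) + ["bytestoclients"]
    # 2. Use collectiondate (epoch seconds) as the event time, then drop both dates.
    out["@timestamp"] = datetime.fromtimestamp(int(out["collectiondate"]), tz=timezone.utc)
    for name in ("collectiondate", "expirationdate"):
        out.pop(name, None)
    # 3. Stamp the event with this server's location details.
    out.update(SITE_FIELDS)
    return out


event = {
    "type": "bytestoclients",
    "metricname": "bytes.fromcache.toclients",
    "datavalue": 907,
    "collectiondate": 1487115473,
    "expirationdate": 1487720273,
}
result = apply_filter(event)
print(result)
```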

The output

Now we are getting closer, the output section is where we tell Logstash what to do with all the data we have ingested, filtered and mutated.

Again all of this is pretty straightforward, but there are a couple of things that I’ll talk about:

We are going to use an if statement to check if the data coming from our input contains a string in a tag.

For example, if the string ‘bytestoclients’ exists in a tag, then we should use a certain output.

This allows us to direct the inputs we created above to a specific output. Each output will have a measurement name and a list of fields (datapoint) that will be sent to influx

We have to list each metric name in the coerce_values section to ensure the data is sent as a float or integer because otherwise it will be sent as a string and this is no good for our math.

There is also an open issue on GitHub with the influxdb output plugin where we can’t use a variable to handle this. Ideally we would simply be able to use something like

coerce_values => {

"%{metricname}" => "integer"

}

But unfortunately this does not work, and we must list out each metric name, like an animal

output {

if "bytestoclients" in [tags] {

influxdb {

allow_time_override => true

host => "my.influxdb.server"

measurement => "bytestoclients"

idle_flush_time => 1

flush_size => 100

send_as_tags => [ "region", "site_code", "site_name", "school_type" ]

data_points => {

"%{metricname}" => "%{datavalue}"

"region" => "%{region}"

"site_name" => "%{site_name}"

"site_code" => "%{site_code}"

"school_type" => "%{school_type}"

}

coerce_values => {

"bytes.fromcache.toclients" => "integer"

"bytes.fromorigin.toclients" => "integer"

"bytes.frompeers.toclients" => "integer"

"bytes.fromorigin.topeers" => "integer"

"bytes.fromcache.topeers" => "integer"

}

db => "caching"

retention_policy => "autogen"

}

}

}

So the only really interesting things here are:

send_as_tags : This is where we send the fields we created in the mutate section to influx as tags. The trick here, which is barely documented if at all, is that we also need to specify them as data points.

data_points : Here we need to add our tags (extra fields we added from mutate) as datapoints to send to influxdb, we use the %{name} syntax just like we would use a $name variable in bash. This will then replace the variable with the content of the field from the mutate section.

retention_policy : This is the retention policy of the influx db, again documentation was a bit hard to find on this one, but the default retention policy is not actually called default as it seems to be mentioned everywhere, in fact the default policy is actually called ‘autogen’

Consult the InfluxDB documentation for more info
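The interplay between data_points, send_as_tags and coerce_values can be modelled in a few lines of Python. This is a simplified picture of the behaviour described above, not the plugin's code; `split_point` is a hypothetical helper and the input dict represents data_points after the %{...} references have been filled in.

```python
def split_point(data_points: dict, send_as_tags: list, coerce_values: dict):
    """Split resolved data points into InfluxDB tags and typed fields."""
    tags = {}
    fields = {}
    for key, value in data_points.items():
        if key in send_as_tags:
            # Listed in send_as_tags: sent as a tag instead of a field.
            tags[key] = value
            continue
        wanted = coerce_values.get(key)
        if wanted == "integer":
            value = int(value)
        elif wanted == "float":
            value = float(value)
        # Anything not coerced stays a string, which is no good for sum().
        fields[key] = value
    return tags, fields


tags, fields = split_point(
    {
        "bytes.fromcache.toclients": "907",
        "region": "Region 1",
        "site_name": "Site Name Alpha",
        "site_code": "1234",
        "school_type": "High School",
    },
    ["region", "site_code", "site_name", "school_type"],
    {"bytes.fromcache.toclients": "integer"},
)
print(tags, fields)
```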

Completed conf file

So now we have those sections filled out we should have a complete conf file that looks somewhat like this:


input {
  jdbc {
    jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"
    jdbc_driver_class => "org.sqlite.jdbc"
    jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"
    jdbc_user => ""
    schedule => "* * * * *"
    statement => 'SELECT * FROM statsData WHERE metricname LIKE "bytes.%.toclients" OR metricname LIKE "bytes.%.topeers"'
    tracking_column => "entryindex"
    use_column_value => true
    type => "bytestoclients"
  }
  jdbc {
    jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"
    jdbc_driver_class => "org.sqlite.jdbc"
    jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"
    jdbc_user => ""
    schedule => "* * * * *"
    statement => 'SELECT * FROM statsData WHERE metricname LIKE "requests.%"'
    tracking_column => "entryindex"
    use_column_value => true
    type => "requests"
  }
  jdbc {
    jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"
    jdbc_driver_class => "org.sqlite.jdbc"
    jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"
    jdbc_user => ""
    schedule => "* * * * *"
    statement => 'SELECT * FROM statsData WHERE metricname LIKE "replies.%"'
    tracking_column => "entryindex"
    use_column_value => true
    type => "replies"
  }
  jdbc {
    jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"
    jdbc_driver_class => "org.sqlite.jdbc"
    jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"
    jdbc_user => ""
    schedule => "* * * * *"
    statement => 'SELECT * FROM statsData WHERE metricname LIKE "bytes.purged.%"'
    tracking_column => "entryindex"
    use_column_value => true
    type => "bytespurged"
  }
  jdbc {
    jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"
    jdbc_driver_class => "org.sqlite.jdbc"
    jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"
    jdbc_user => ""
    schedule => "* * * * *"
    statement => 'SELECT * FROM statsData WHERE metricname LIKE "bytes.dropped"'
    tracking_column => "entryindex"
    use_column_value => true
    type => "bytesdropped"
  }
  jdbc {
    jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"
    jdbc_driver_class => "org.sqlite.jdbc"
    jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"
    jdbc_user => ""
    schedule => "* * * * *"
    statement => 'SELECT * FROM statsData WHERE metricname LIKE "bytes.imported.%"'
    tracking_column => "entryindex"
    use_column_value => true
    type => "bytesimported"
  }
  jdbc {
    jdbc_driver_library => "/usr/local/logstash-5.2.1/plugins/sqlite-jdbc-3.16.1.jar"
    jdbc_driver_class => "org.sqlite.jdbc"
    jdbc_connection_string => "jdbc:sqlite:/Library/Server/Caching/Logs/Metrics.sqlite"
    jdbc_user => ""
    schedule => "* * * * *"
    statement => 'SELECT * FROM statsData WHERE metricname LIKE "imports.%"'
    tracking_column => "entryindex"
    use_column_value => true
    type => "imports"
  }
}
filter {
  if [type] == "bytestoclients" {
    mutate {
      add_tag => [ "bytestoclients" ]
    }
  }
  if [type] == "bytespurged" {
    mutate {
      add_tag => [ "bytespurged" ]
    }
  }
  if [type] == "bytesimported" {
    mutate {
      add_tag => [ "bytesimported" ]
    }
  }
  if [type] == "imports" {
    mutate {
      add_tag => [ "imports" ]
    }
  }
  if [type] == "bytesdropped" {
    mutate {
      add_tag => [ "bytesdropped" ]
    }
  }
  if [type] == "replies" {
    mutate {
      add_tag => [ "replies" ]
    }
  }
  if [type] == "requests" {
    mutate {
      add_tag => [ "requests" ]
    }
  }
  date {
    match => [ "collectiondate", "UNIX" ]
    remove_field => [ "collectiondate", "expirationdate" ]
  }
  mutate {
    add_field => {
      "region" => "Region 1"
      "site_name" => "Site Name Alpha"
      "site_code" => "1234"
      "school_type" => "High School"
    }
  }
}
output {
  if "bytestoclients" in [tags] {
    influxdb {
      allow_time_override => true
      host => "my.influxdb.server.com"
      measurement => "bytestoclients"
      idle_flush_time => 1
      flush_size => 100
      send_as_tags => [ "region", "site_name", "site_code", "school_type" ]
      data_points => {
        "%{metricname}" => "%{datavalue}"
        "region" => "%{region}"
        "site_name" => "%{site_name}"
        "site_code" => "%{site_code}"
        "school_type" => "%{school_type}"
      }
      coerce_values => {
        "bytes.fromcache.toclients" => "integer"
        "bytes.fromorigin.toclients" => "integer"
        "bytes.frompeers.toclients" => "integer"
        "bytes.fromorigin.topeers" => "integer"
        "bytes.fromcache.topeers" => "integer"
      }
      db => "caching"
      retention_policy => "autogen"
    }
  }
  if "bytespurged" in [tags] {
    influxdb {
      allow_time_override => true
      host => "my.influxdb.server.com"
      measurement => "bytespurged"
      idle_flush_time => 1
      flush_size => 100
      send_as_tags => [ "region", "site_name", "site_code", "school_type" ]
      data_points => {
        "%{metricname}" => "%{datavalue}"
        "region" => "%{region}"
        "site_name" => "%{site_name}"
        "site_code" => "%{site_code}"
        "school_type" => "%{school_type}"
      }
      coerce_values => {
        "bytes.purged.youngerthan1day" => "integer"
        "bytes.purged.youngerthan7days" => "integer"
        "bytes.purged.youngerthan30days" => "integer"
        "bytes.purged.total" => "integer"
      }
      db => "caching"
      retention_policy => "autogen"
    }
  }
  if "bytesimported" in [tags] {
    influxdb {
      allow_time_override => true
      host => "my.influxdb.server.com"
      measurement => "bytesimported"
      idle_flush_time => 1
      flush_size => 100
      send_as_tags => [ "region", "site_name", "site_code", "school_type" ]
      data_points => {
        "%{metricname}" => "%{datavalue}"
        "region" => "%{region}"
        "site_name" => "%{site_name}"
        "site_code" => "%{site_code}"
        "school_type" => "%{school_type}"
      }
      coerce_values => {
        "bytes.imported.byhttp" => "integer"
        "bytes.imported.byxpc" => "integer"
      }
      db => "caching"
      retention_policy => "autogen"
    }
  }
  if "imports" in [tags] {
    influxdb {
      allow_time_override => true
      host => "my.influxdb.server.com"
      measurement => "imports"
      idle_flush_time => 1
      flush_size => 100
      send_as_tags => [ "region", "site_name", "site_code", "school_type" ]
      data_points => {
        "%{metricname}" => "%{datavalue}"
        "region" => "%{region}"
        "site_name" => "%{site_name}"
        "site_code" => "%{site_code}"
        "school_type" => "%{school_type}"
      }
      coerce_values => {
        "imports.byhttp" => "integer"
        "imports.byxpc" => "integer"
      }
      db => "caching"
      retention_policy => "autogen"
    }
  }
  if "bytesdropped" in [tags] {
    influxdb {
      allow_time_override => true
      host => "my.influxdb.server.com"
      measurement => "bytesdropped"
      idle_flush_time => 1
      flush_size => 100
      send_as_tags => [ "region", "site_name", "site_code", "school_type" ]
      data_points => {
        "%{metricname}" => "%{datavalue}"
        "region" => "%{region}"
        "site_name" => "%{site_name}"
        "site_code" => "%{site_code}"
        "school_type" => "%{school_type}"
      }
      coerce_values => {
        "bytes.dropped" => "integer"
      }
      db => "caching"
      retention_policy => "autogen"
    }
  }
  if "replies" in [tags] {
    influxdb {
      allow_time_override => true
      host => "my.influxdb.server.com"
      measurement => "replies"
      idle_flush_time => 1
      flush_size => 100
      send_as_tags => [ "region", "site_name", "site_code", "school_type" ]
      data_points => {
        "%{metricname}" => "%{datavalue}"
        "region" => "%{region}"
        "site_name" => "%{site_name}"
        "site_code" => "%{site_code}"
        "school_type" => "%{school_type}"
      }
      coerce_values => {
        "replies.fromcache.toclients" => "integer"
        "replies.fromcache.topeers" => "integer"
        "replies.fromorigin.toclients" => "integer"
        "replies.fromorigin.topeers" => "integer"
        "replies.frompeers.toclients" => "integer"
      }
      db => "caching"
      retention_policy => "autogen"
    }
  }
  if "requests" in [tags] {
    influxdb {
      allow_time_override => true
      host => "my.influxdb.server.com"
      measurement => "requests"
      idle_flush_time => 1
      flush_size => 100
      send_as_tags => [ "region", "site_name", "site_code", "school_type" ]
      data_points => {
        "%{metricname}" => "%{datavalue}"
        "region" => "%{region}"
        "site_name" => "%{site_name}"
        "site_code" => "%{site_code}"
        "school_type" => "%{school_type}"
      }
      coerce_values => {
        "requests.frompeers" => "integer"
        "requests.fromclients" => "integer"
      }
      db => "caching"
      retention_policy => "autogen"
    }
  }
}

Install the conf file

- Create a directory in our logstash dir to store our conf file

mkdir -p /usr/local/logstash-5.2.1/conf

- Create the conf file and move it into this new location

cp logstash.conf /usr/local/logstash-5.2.1/conf/

Running Logstash

Ok so now we have Logstash all installed and configured, we need a way to get Logstash running and using our configuration file.

Of course this is a perfect place to use a launch daemon. I won’t go into much depth as there are many great resources out there on how to create and use launchdaemons.

If you haven’t already, go ahead and check out launchd.info

Here is a launchd plist that I’ve created already. Just pop this into your /Library/LaunchDaemons folder, give your machine a reboot and Logstash should start running.


<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>Label</key>
    <string>com.org.logstash</string>
    <key>ProgramArguments</key>
    <array>
        <string>/usr/local/logstash-5.2.1/bin/logstash</string>
        <string>-f</string>
        <string>/usr/local/logstash-5.2.1/conf/logstash.conf</string>
    </array>
    <key>RunAtLoad</key>
    <true/>
    <key>KeepAlive</key>
    <true/>
</dict>
</plist>
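If you would rather generate the launchd job than hand-edit XML (handy when the Logstash version in the path changes), Python's standard plistlib can produce an equivalent file. This is a sketch under the assumption that the paths and label match the plist above; writing it to /Library/LaunchDaemons is left out on purpose.

```python
import plistlib

# Same keys and values as the hand-written launchd plist.
job = {
    "Label": "com.org.logstash",
    "ProgramArguments": [
        "/usr/local/logstash-5.2.1/bin/logstash",
        "-f",
        "/usr/local/logstash-5.2.1/conf/logstash.conf",
    ],
    "RunAtLoad": True,
    "KeepAlive": True,
}

# dumps() produces XML plist bytes by default.
plist_bytes = plistlib.dumps(job)

# Reading it back is a cheap check that the file is well formed.
roundtrip = plistlib.loads(plist_bytes)
print(plist_bytes.decode("utf-8"))
```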

Setting up InfluxDB and Grafana

There are lots and lots of guides on the web for how to get these two items setup, so I won’t go into too much detail. My preferred method of deployment for these kinds of things is to use Docker.

This makes it very quick to deploy and manage the service.

I’ll assume that you already have a machine that is running docker and have a basic understanding of how docker works.

If not, again there are tons of guides out there and it really is pretty simple to get started.

InfluxDB

You can get an InfluxDB instance set up very quickly with the below command. This will create a db called caching. You can of course give it any name you like, but you will need to remember it when we connect Grafana to it later on.

docker run -d -p 8083:8083 -p 8086:8086 -e PRE_CREATE_DB=caching --expose 8090 --expose 8099 --name influxdb tutum/influxdb

You should now have InfluxDB up and running on your docker machine.

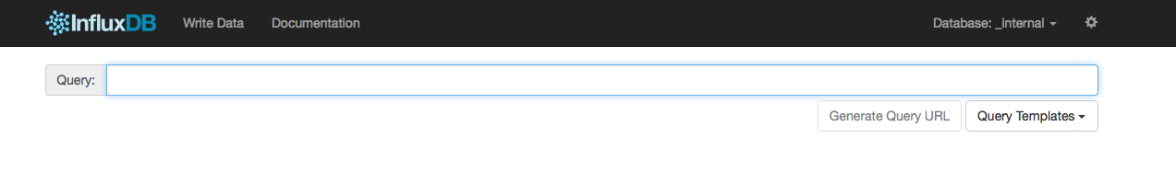

Port 8083 is the admin web app port and you can check your influxDB is up and running by pointing your web browser to your docker machine IP address on port 8083. You should then get your influx DB web app like this:

You can also set up Grafana on the same machine with the following command. This will automatically ‘link’ the Grafana instance to the InfluxDB and allow communication between the two containers.

docker run -d -p 3000:3000 --link influxdb:influxdb --name grafana grafana/grafana

Now you should also have a Grafana instance running on your docker machine on port 3000. Load up a web browser and point it to your docker machine IP address on port 3000 and you should get the Grafana web app like this:

The default login should be admin/admin

Login and add a data source

Setting up the dashboards

So now we get to the fun stuff, displaying the data!

Start by creating a new dashboard

Now select the Graph panel.

On the Panel Title select edit

Now we can get to the guts of it, creating the query to display the information we want

Under the Metrics heading, click on the A to expand the query.

From here it is pretty straight forward as Grafana will help you by giving you pop up menus of the items you can choose:

What might be a bit strange is that the FROM is actually the retention policy. You might think that the FROM should be the name of the database, but no, it’s the name of the default retention policy, which in our case should be autogen.

If you need to remove an item just click it and a menu will appear allowing you to remove it. Here’s an example of removing the mean() item

So to display some information you can start with a query like this:

This is going to select all the data from the database caching, with the retention policy of autogen, in the field called bytes.fromcache.toclients

Next we are going to select all of those values in that bytes.fromcache.toclients measurement, by telling it to select field(value)

Then we click plus next to the field(value) and from the aggregations menu choose sum() this will then add the values all together.

Then we want to display that total grouped by 1 day – time(1d)

This will show us how much data has been delivered to client devices, from the cache on our caching server in 1 day groupings.

Phew, ok that’s the query done.

But that’s just going to show us how much data came from the “cache”; it’s not going to show us how much data was delivered to clients from cache+peers+origin.

So for that query, we have to do a little trick.

We select the measurement bytestoclients.

Then we select the field bytes.fromcache.toclients, click the plus and add our other fields so it looks like this:

But you might notice that this doesn’t show us a single bar graph like we want, we have to manually edit the query to remove some erroneous commas

Hit the toggle edit mode button:

And then remove the commas and add a plus symbol instead.

from:

to:

Now we need to format the graph to look pretty. Under the Axes heading we need to change the unit to bytes.

Under the Legend heading, we can also add the Total so that it prints the total next to our measurement on our graph.

And to finish it off we will change the display from lines to bars. Under the Display heading check bars and uncheck lines.

Almost there.

From the top right, let’s select a date range to display, like this week for example.

AND BOOM!

You can of course change the heading from Panel Title to something more descriptive, add your own headings and axis titles etc etc

Of course you can also add additional queries to the graph so you can see multiple measurements at once for comparison.

For example, we might want to see how much data was sent to clients, and how much of it had to be retrieved from Apple

We just add another query under the metrics heading.

So lets add the data from the bytes.fromorigin.toclients field

We can also use the WHERE filter to select only the data from a particular caching server rather than all of the caching server data that is being shown above.

That should be enough to get you going and creating some cool dashboards for your management types.

Any idea on where the Metrics database moved to in High Sierra’s Content Caching feature?


Sorry mate no idea, no longer working with MacOS
